Recap of Vibe Coding in May 2025 | How I Built a Full Product with 10k Lines of Code in One Month

What I learned from building an AI podcast tool, deepdialogue.pro, with AI.

Update: Ignore and forget everything I wrote; just use the latest version of Claude Code.

Context

About a month ago, I saw a post from Canva's CTO Brendan Humphreys:

No, you won't be vibe coding your way to production.

Not if you prioritise quality, safety, security, and long-term maintainability at scale.

This matched what I had observed of vibe coding at the time, but I wanted to know for sure. And if vibe coding really wasn't there yet, I wanted to find out what was missing and which strategies could fill the gap.

And there's no better way to find out than by actually building a production-level product with AI.

Result

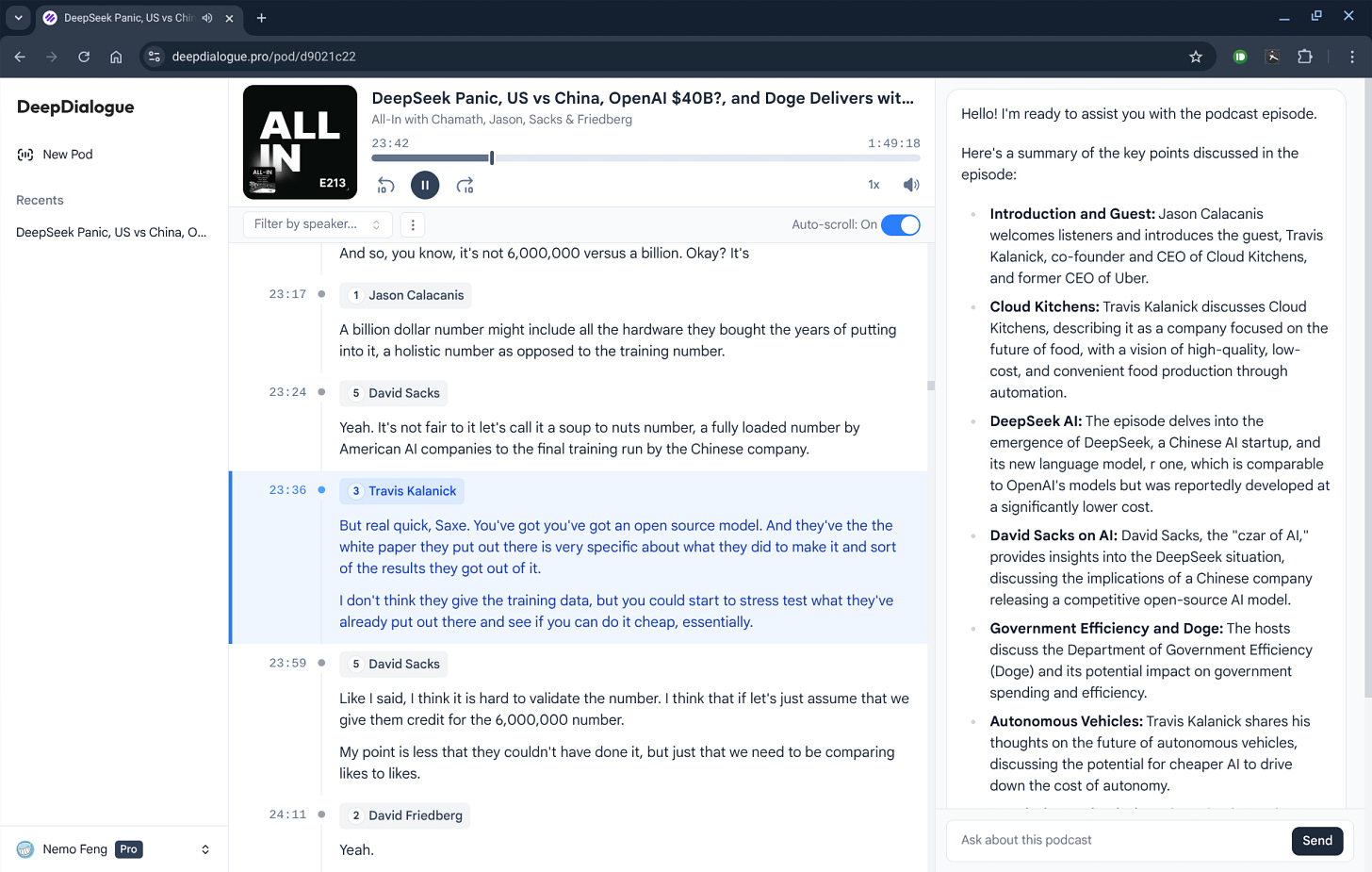

Screenshot of the product I built in one month¹

The idea came from my own pain points when listening to long, conversational podcasts (e.g., All-In, Lex Fridman). I wanted something that would help me follow conversations effortlessly and let me jump between timestamps and speakers to uncover hidden insights.

So I built an AI podcast tool called DeepDialogue that converts audio into clear, speaker-diarized transcripts, with handy features like speaker filtering, active highlighting, auto-scroll, and quick navigation. Most importantly, it includes an AI chatbot with a complete understanding of the podcast content that can answer any question, whether you need a summary or want to extract a specific speaker's viewpoints.

Try it: https://www.deepdialogue.pro/

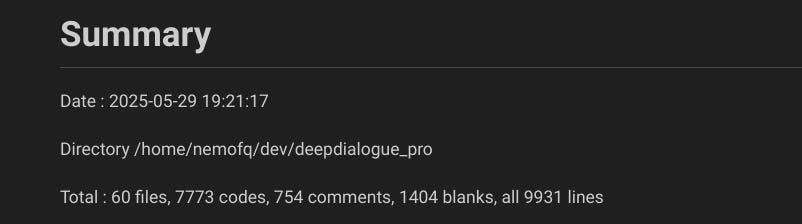

This is a substantial project of nearly 10,000 lines of code, built on the Next.js framework, with React on the frontend and a hybrid Node.js and Python backend.

Tactics

Here are six tactics for vibe coders that I found useful along the way.

1. Define your end goal

There are actually two types of vibe coding, and people often mix them up:

For people who can program: helping them ship faster. Typical products include Cursor, Windsurf, and Augment Code.

For citizen developers: helping them create without learning to code. Typical products include Bolt.new and Lovable.

There is some overlap and ambiguity between the two.

For example, if someone wants to build a tool to track the latest stock market movements and news for certain tickers, both Cursor and Lovable could help them get it done. But it's important to define your end goal and identify who you are before choosing the appropriate path, to avoid wasting time and resources on the wrong one.

If you have never written code and want to create something for yourself and your friends that doesn't need complex login, payment, database, or API integrations, the citizen-developer tools I mentioned are a great fit. In my view, general-purpose agent products like Manus and Genspark also belong in this category, since they essentially turn each task into a "program" as output.

Personally, I don't have much experience with tools for citizen developers, but I do feel that the current bottleneck is not the LLM (at least not the biggest one anymore), but rather the following two issues:

How do you integrate with third-party systems (e.g., databases, storage, auth, payments, APIs)? Traditional providers are overkill here. If someone could offer a payment capability that any website can add with a prompt or two, it could become the new Stripe. MCP (Model Context Protocol) could be one answer.

How do people distribute their creations? We need a new App Store or Google Play for this, and I'm happy to see new products like YouWare appear in this space.

For coding agents designed for programmers, context window (or effective context window) limitations are the culprit behind many issues.

2. Choose the best model

Choose the best model, not the tool. Whether it's a VS Code fork like Cursor or Windsurf, or a plugin like Augment Code or Cline, I don't think the tools differ much beyond user preference and interaction details. In this project, for example, because I consumed such a huge number of tokens, I simply used the consumer Claude and Gemini chatbots alongside the Sublime Text editor. Don't get me wrong, though: the tools still matter a lot for competition, adoption, and accumulating data to build better models and products.

Which LLM is best for coding may be the easiest question for the community to answer: Gemini 2.5 Pro = Claude (3.5/3.7/4) > all others.

From my interactions with these top two models, I do have some comparisons to share:

Gemini 2.5 Pro:

Pros:

Larger context window, so you can follow up on a task many times (typically 10+) without degraded intelligence

Great at logic problems, like debugging business logic issues

Cons:

May lose track of your language preference: all sorts of languages (e.g., Spanish, German) can appear in its replies. The good news is that this doesn't affect the code itself

Can get stuck at "Show thinking"; re-sending the message can fix this

Not as good at UI; it often uses more complex methods to achieve the same design

Writes too many, overly long comments, in my opinion (an internal habit or requirement at Google?)

Claude (3.5/3.7/4):

Pros:

Can write concise and beautiful UI code

Faster responses (without extended thinking)

Cons:

The effective context window is quite small: performance degrades significantly once a conversation gets long, forcing you to start a new chat

For complex logic problems, it tends to offer multiple options, but more often than not none of them work, which is all the more disappointing once you've actually tried every one

Not sure which model to choose? Simple: copy and paste your prompt into both, get some coffee, and come back to compare which answer you prefer :)

3. Start from a template

If I could give vibe coders starting a new project only one piece of advice, it would be this.

The reason, once again, is the LLM's limited context window. Once your project is underway and has accumulated a fair amount of code, the LLM can no longer read all the project files, identify the connections and data flow between components, and then implement new features or fix bugs.

The context window would simply be overloaded.
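To make this constraint concrete, here is a rough TypeScript sketch that estimates whether a set of source files fits in a model's context window while leaving room for the answer. It assumes about 4 characters per token, a common rule of thumb rather than any model's real tokenizer. `SourceFile`, `estimateTokens`, and `fitsInContext` are hypothetical names, not part of any library.

```typescript
// Rough estimate of whether a set of source files fits in a context window.
// ASSUMPTION: ~4 characters per token, a common rule of thumb; real
// tokenizers differ by model, language, and code style.
const CHARS_PER_TOKEN = 4;

interface SourceFile {
  path: string;
  content: string;
}

// Approximate token count for a piece of text (rounded up).
function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Sums the estimated tokens of all files and checks them, plus a budget
// reserved for the model's answer, against the context window size.
function fitsInContext(
  files: SourceFile[],
  contextWindowTokens: number,
  reservedForAnswer: number,
): { totalTokens: number; fits: boolean } {
  const totalTokens = files.reduce(
    (sum, file) => sum + estimateTokens(file.content),
    0,
  );
  return {
    totalTokens,
    fits: totalTokens + reservedForAnswer <= contextWindowTokens,
  };
}
```

Under the same assumption, a hypothetical 10,000-line codebase averaging 40 characters per line comes to about 400,000 characters, or roughly 100,000 tokens, before any conversation history is added. Since the effective context window can be much smaller than the advertised one, a "fits" result here is an optimistic upper bound.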

For instance, I once needed to fix a height inheritance issue in the UI code (a common problem with Tailwind CSS), which required editing multiple files along the component tree. After several failed attempts with the model, I gave up and fixed it by hand.

To make matters worse, LLMs naturally tend to scatter code all over the codebase when implementing a single feature. All of this can be avoided by starting from a template, which gives each module a clear, independent place, so the LLM only needs to work within a single block or file of code.

A template is like hiring a human architect, so I recommend it to everyone. It's worth taking the time to find the template that best suits your case before writing your first line of code, or your first prompt. Here are two templates I recommend:

Next.js SaaS Starter by Vercel

Next.js + Stripe + Supabase Production-Ready Template from my friend Sean

4. Code backwards

"Working Backwards" is a well-known product development approach invented by Amazon and later adopted broadly by other big tech companies like Airbnb and ByteDance. The key tenet of this process is to start by defining the customer experience, then iteratively work backwards from that point.

In vibe coding, we can "code backwards": throw every token you have at the problem. Prompt, paste the code in to see whether it works, then come back and prompt again until you have a working version. I call this process "Punch Through."

With a working version in hand, you can read through it to see how to optimize the code, trim unnecessary parts, and add details, ultimately arriving at code that meets your requirements and stays maintainable, rather than the overly complex, unclear logic that LLMs produce on their first pass.

5. Make every shot long and count

LLM chats accumulate context with every round: each time you send a message, you are actually sending the entire conversation history along with your latest prompt. Combine that with today's context window limits, and you can expect the first couple of rounds to be the most intelligent, or even just the very first one to be the most effective.
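The accumulation can be sketched in a few lines of TypeScript. This is a simplified model of a typical chat client, not any specific vendor's implementation: every request carries the full history plus the new prompt, so request size grows with each round. `ChatSession` and `requestTokens` are hypothetical names, and token counts again assume roughly 4 characters per token.

```typescript
// Simplified model of a chat client: each request resends the whole history.
// ASSUMPTION: ~4 characters per token (a rough rule of thumb).
type Role = "user" | "assistant";

interface Message {
  role: Role;
  content: string;
}

const estimateTokens = (text: string): number => Math.ceil(text.length / 4);

class ChatSession {
  private history: Message[] = [];

  // The payload for this turn: all previous messages plus the new prompt.
  buildRequest(prompt: string): Message[] {
    return [...this.history, { role: "user", content: prompt }];
  }

  // After the model replies, both sides of the turn join the history.
  record(prompt: string, reply: string): void {
    this.history.push(
      { role: "user", content: prompt },
      { role: "assistant", content: reply },
    );
  }
}

// Estimated tokens sent in a single request.
function requestTokens(messages: Message[]): number {
  return messages.reduce((sum, m) => sum + estimateTokens(m.content), 0);
}
```

With a 400-character prompt and a 2,000-character reply in every round, the estimated request size over five rounds goes 100, 700, 1,300, 1,900, and 2,500 tokens: by round five, each request carries 25 times as many tokens as the first, almost all of it history.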

So instead of wasting the context window on short messages, make your prompt as comprehensive as possible and don't worry about it being too long. It may seem counterintuitive, but it's actually a more effective way to prompt.

While working on this project, I frequently wrote prompts thousands of lines long, including multiple code files, detailed requirements, the desired data flow, and so on, and it just worked, especially in the first one or two rounds of a conversation.
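One way to assemble such a prompt is to keep each piece in its own section and concatenate them. The helper below is a hypothetical sketch of one possible structure, not a prescribed format: the section titles and the `<file>` tags around each code file are arbitrary conventions that help the model see where each file starts and ends, and the optional `docs` parameter is a place to paste in up-to-date documentation the model may not have seen.

```typescript
// Hypothetical helper for packing one long, comprehensive first prompt.
// Section titles and the <file> wrapper are illustrative conventions only.
interface CodeFile {
  path: string;
  content: string;
}

function buildFirstPrompt(
  goal: string,
  requirements: string[],
  dataFlow: string,
  files: CodeFile[],
  docs: string[] = [], // optional: latest docs the model may not know about
): string {
  const sections: string[] = [
    `## Goal\n${goal}`,
    // Number the requirements so the model can refer back to them.
    `## Requirements\n${requirements.map((r, i) => `${i + 1}. ${r}`).join("\n")}`,
    `## Desired data flow\n${dataFlow}`,
  ];
  // Wrap each file so its boundaries and path are unambiguous.
  for (const file of files) {
    sections.push(`<file path="${file.path}">\n${file.content}\n</file>`);
  }
  if (docs.length > 0) {
    sections.push(`## Reference documentation\n${docs.join("\n\n")}`);
  }
  return sections.join("\n\n");
}
```

The result is sent as the first message of a fresh chat, which is where, as described above, the model is most effective.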

6. Use AI-friendly services/SDKs

My definition of AI-friendly for services/SDKs has two aspects:

Comprehensive, detailed documentation: LLMs are likely to have learned a lot about the service during pre-training, and when the model lacks the latest updates, you can easily find them and paste them in.

Simplified permission management: this spares LLMs from navigating the hierarchy of traditional permission and authentication management. In my experience, they're really not good at it, and neither are human developers.

Here are some choices I highly recommend trying:

Vercel for hosting

Supabase for database and auth

AI SDK by Vercel for integrating LLM-based features like chatbots into your product

Unfortunately, I haven't found a billing/payment solution that is truly easy to use in vibe coding projects, so let me know if you have recommendations.

Recap

As it turns out, the context window is the most important LLM factor for vibe coding right now. I can't wait to see a model achieve a breakthrough here, along with improved intelligence.

And lastly, try the product of my vibe coding journey here: https://www.deepdialogue.pro/

¹ Weekends and evenings only; I estimate it was roughly equivalent to 1.5 weeks of full-time work.